%20copy.jpg)

Now that the potential of large language models (LLMs) is known by everyone and their mom, the language model community is quickly moving towards small language models. The progress has been driven by the efforts to reduce the computational cost of LLMs, so that everyone could have access to this inspiring technology.

Notable trends in reducing the memory and computation cost needed by language models include quantization of large models and knowledge distillation into small models. There is also important work done to optimize model run times on CPUs, which further increases the breadth of possible LLM applications. But perhaps more about those later..

In this post, I’d like to highlight two interesting recent reports from the language model space, with some results I got!

The first one is MEGABYTE, a neural network architecture for generative modeling. It’s a new type of a transformer model that has some interesting properties. MEGABYTE is rather resource efficient and scales to very long prompt lengths.

Perhaps more interestingly, MEGABYTE works directly on sequences of bytes. This means you don’t need to tokenize text before feeding it to a model, which is a pain point of current LLMs. Thanks to working directly on bytes, you can use the MEGABYTE architecture to deal with other modalities like audio and images, in addition to text.

The other interesting development is the TinyStories dataset. It may not be a huge surprise, but the authors found they were able to train really good SMALL language models, when they restricted the vocabulary and theme of their dataset. This is very relevant information for minority languages, like Finnish, where training data availability is limited.

The TinyStories authors also released an instruction-tuning dataset! With instruct-training, their tiny model is able to create stories on command, based on user-requested features. It’s cool how these abilities emerge even in smaller models.

Combining these findings, we can try to train generative language models from scratch on a laptop. Which is what I did, and trained MEGABYTE on the TinyStories data! Thanks lucidrains for open-sourcing your METABYTE implementation!

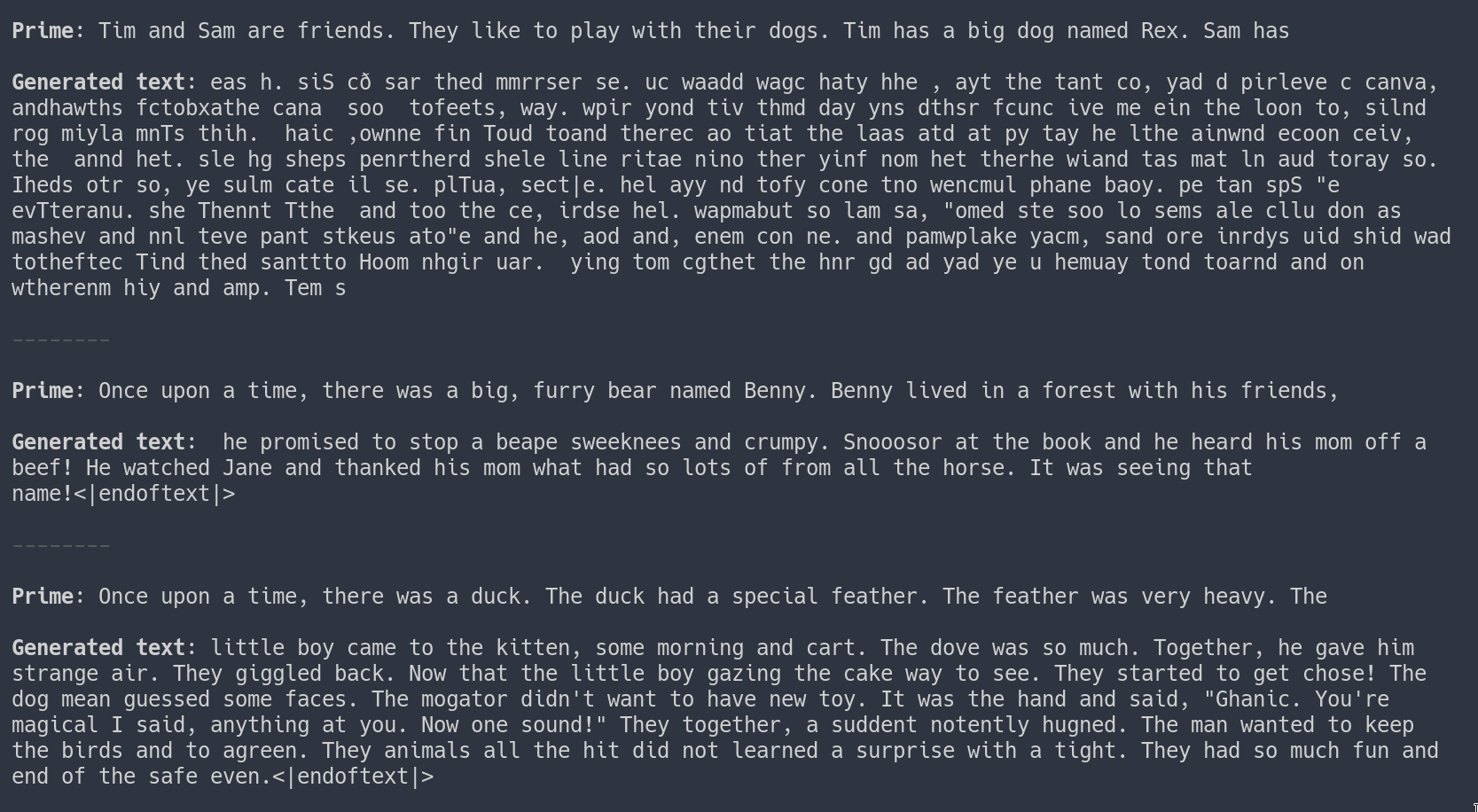

In the attached images you can see how the generated text looks after a few hours of training a 40M parameter model on a laptop. That is an M, not a B. So the size of the model is much smaller than the currently popular billion-parameter monsters. The Tiny Stories authors trained even smaller models, but recall that MEGABYTE can be more efficient per parameter.

I’m still learning to use MEGABYTE myself, and working to develop intuition on how the different parameters affect the end result. Happy to chat about it :D

I can’t wait to see how the model looks like after some more training, and I’m especially looking forward to fine-tuning models on the TinyStories instruct data. More about that in the future :)

Also, stay tuned for the code!

MEGABYTE: https://arxiv.org/abs/2305.07185

MEGABYTE-pytorch: https://github.com/lucidrains/MEGABYTE-pytorch

TinyStories: https://arxiv.org/abs/2305.07759

When it comes to potential tiny specialized language models applications, it comes in handy that they can be run on personal hardware or in a cloud environment hence increasing privacy of your data. We understand very well the importance of privacy and security when it comes to handling your enterprise data, that is why we have recently launched YOKOT.AI — a Generative AI solution for Enterprise use.

%20copy.jpg)