
Most teams live inside spreadsheets, CRM exports, and SQL databases. These datasets hold real business signals, such as churn patterns, sales cycles, and user retention, but large language models still struggle to analyze them. They were trained for natural language, not structured data. When you paste an Excel sheet into a chatbot, it won’t see the relationships that matter or find the insights that drive decisions. Here we show how Softlandia’s agentic AI closes that gap.

This blog post dives into how language models handle structured, tabular data, exploring the unique challenges they face and the benefits they offer. We’ll look at practical ways these AI tools can help us make sense of numbers and tables, using non-trivial tabular data with free-text columns as an example. After all, a component for understanding tabular data is an integral part of a solid AI engine, and you’ll want one if you plan to fully utilize generative AI.

Understanding and acting on tabular data, such as spreadsheets and comma-separated values (CSV) files, remains challenging for large language models (LLMs). LLMs lose context and accuracy when working with tabular data for two reasons:

- Context limits: even long-context models can’t reliably process dense data where every cell matters.

- Weak numerical reasoning: transformer attention wasn’t designed for math-heavy or column-dependent logic.

Both issues are prevalent with tabular data, which often comprises many rows of mixed numerical and textual data. Hence, just pasting your Excel sheet into a chatbot will likely have limited utility: the results will not properly consider all the data, and any numerical operations will be unreliable.

Note that even if an LLM with a long context window does well in the needle-in-a-haystack test, that performance typically does not transfer to very information-dense data like tables. The required capabilities simply are not there.
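To get a feel for the context-limit problem, here is a rough Python sketch that estimates how many tokens a table consumes once it is serialized as text. The ratio of 4 characters per token is a common rule of thumb rather than an exact figure (real tokenizers vary), and `estimate_tokens` and `fits_in_context` are hypothetical helper names, not part of any library.

```python
import csv
import io

# Assumption: roughly 4 characters per token. Real tokenizers vary,
# and numeric-heavy text often tokenizes less efficiently than prose.
CHARS_PER_TOKEN = 4


def estimate_tokens(rows, chars_per_token=CHARS_PER_TOKEN):
    """Serialize rows as CSV text and estimate the token count from its length."""
    buffer = io.StringIO()
    csv.writer(buffer).writerows(rows)
    return len(buffer.getvalue()) // chars_per_token


def fits_in_context(rows, context_window_tokens):
    """Return True if the serialized table is within the given token budget."""
    return estimate_tokens(rows) <= context_window_tokens
```

Keep in mind that fitting inside the window is only a necessary condition. As noted above, a table that fits can still be analyzed unreliably, because every cell may matter.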

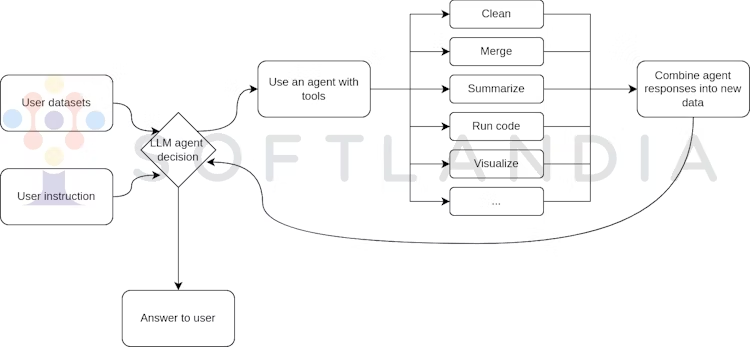

The fix isn’t a bigger model; it’s a smarter workflow. By breaking the task into specialized components, we can scale beyond an LLM’s built-in capability. Agentic systems combine multiple models and tools that handle distinct stages of analysis: parsing, querying, computing, and summarizing. Together, they act as a distributed AI data analyst and interpret large volumes of tabular data more effectively than a single LLM could on its own.

Our agent-based system can process datasets far larger than any single LLM call. Each agent handles a specific skill, like querying structured data, running Python or SQL code, clustering text, training machine learning models or generating summaries. Agents collaborate through a shared task manager that routes data between them and manages task hand-offs. This allows the workflow to stay consistent even when the dataset reaches millions of rows and gives each agent the ability to operate autonomously yet collaboratively.
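As an illustration of the routing idea, the sketch below shows a minimal task manager that passes data from one skill to the next and records each hand-off. It is a simplified assumption about how such a component could look, not Softlandia’s actual implementation: `TaskManager`, the skill names, and the three agent functions are all hypothetical, and plain Python functions stand in for model- or tool-backed agents.

```python
from typing import Any, Callable, Dict, List, Tuple


class TaskManager:
    """Routes each step of a plan to the agent registered for that skill."""

    def __init__(self) -> None:
        self._agents: Dict[str, Callable[[Any], Any]] = {}
        self.trace: List[Tuple[str, Any]] = []  # one entry per hand-off

    def register(self, skill: str, agent: Callable[[Any], Any]) -> None:
        self._agents[skill] = agent

    def run(self, plan: List[str], data: Any) -> Any:
        # Feed the output of each agent into the next one in the plan.
        for skill in plan:
            data = self._agents[skill](data)
            self.trace.append((skill, data))
        return data


# Hypothetical agents: simple functions stand in for real ones.
def filter_agent(rows):
    return [row for row in rows if row["rating"] is not None]


def aggregate_agent(rows):
    return sum(row["rating"] for row in rows) / len(rows)


def summary_agent(mean_rating):
    return f"Average rating: {mean_rating:.2f}"


manager = TaskManager()
manager.register("filter", filter_agent)
manager.register("aggregate", aggregate_agent)
manager.register("summarize", summary_agent)

rows = [{"rating": 4}, {"rating": None}, {"rating": 5}]
result = manager.run(["filter", "aggregate", "summarize"], rows)
```

Because every intermediate result lands in `manager.trace`, each step can be inspected afterwards, which is the property that makes this kind of workflow auditable.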

In practice, our tabular data agents can:

- Clean, merge, and filter large CSV or SQL tables

- Execute code for numeric transformations or aggregation

- Generate summaries and clusters from free-text columns

- Visualize relationships and outliers

- Export results for downstream dashboards or pipelines

The diagram below illustrates the overall functionality of data processing agents:

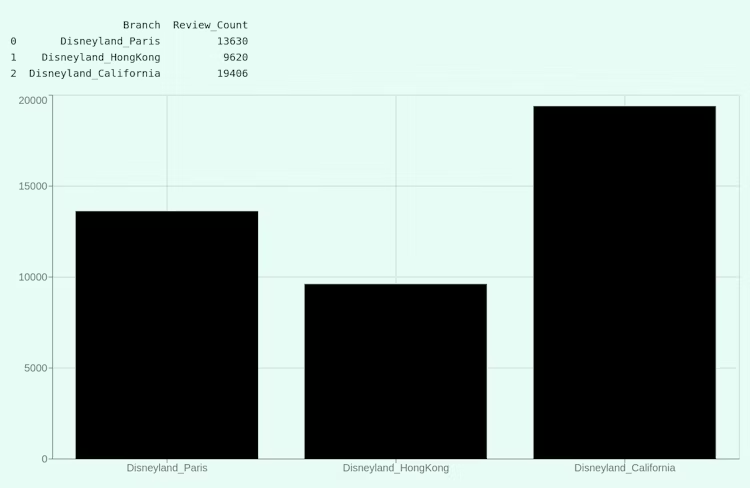

To see this in action, we analyzed 42,000 Disneyland reviews from Kaggle. The dataset mixes text, ratings, and metadata, which is exactly the type of hybrid data that breaks traditional LLMs. Using our agents, we handled the full dataset, identified rating biases, extracted review topics, and visualized sentiment patterns that would have been impossible to get by prompting a single model.

The following visualization was created for us:

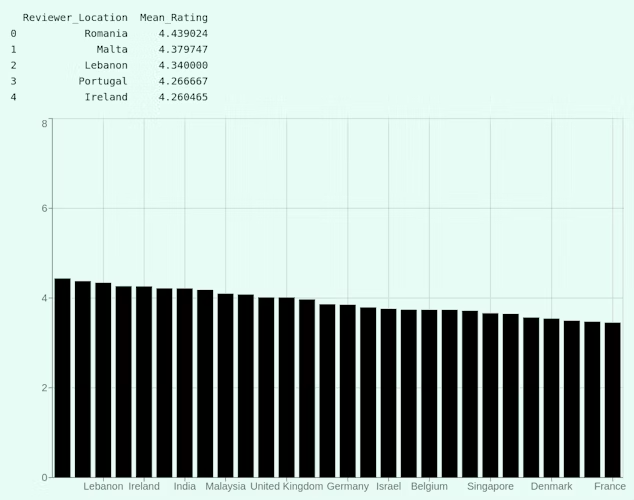

A simple data analysis task that can be answered with the existing columns is to ask which visitors, grouped by home country, are most satisfied with Disneyland Paris.

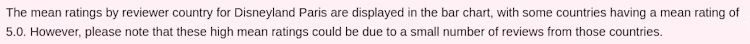

One agent makes a valid point: there are countries with very few reviews in the dataset, which could bias average ratings. Let’s have the agents consider only countries with 50+ reviews.

It seems the French are not too happy with their amusement park 🤷
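The filtering step described above, averaging ratings per country while skipping countries with too few reviews, could be sketched in plain Python as follows. The field names `country` and `rating` and the function `mean_rating_by_country` are assumptions made for illustration. The code the agents actually generated is not shown in this post.

```python
from collections import defaultdict
from statistics import mean


def mean_rating_by_country(reviews, min_reviews=50):
    """Average rating per country, ignoring countries with too few reviews."""
    ratings = defaultdict(list)
    for review in reviews:
        ratings[review["country"]].append(review["rating"])

    averages = {
        country: mean(values)
        for country, values in ratings.items()
        if len(values) >= min_reviews  # small samples bias the average
    }
    # Highest average rating first.
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)
```

The threshold is a parameter rather than a constant so that the same question can be re-asked with a stricter or looser cutoff.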

It’s worth emphasizing that just passing the large dataset to an LLM as text would not give good results for the following tasks. Even if a long-context LLM passes the needle-in-a-haystack test (processing sparse data), the type of analysis we are doing here requires understanding very dense data. The attention mechanism of transformer LLMs is not well suited to such a task. Worse yet, the LLM wouldn’t refuse the assignment, so the result would appear valid on the surface. The agentic approach goes through each review to synthesize an answer, which makes it more reliable.
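One common way to make sure every review is read is a map-reduce pattern: summarize small batches, then summarize the summaries until a single answer remains. The sketch below assumes this pattern for illustration; the post does not state which strategy the agents use internally. The `summarize` argument is a hypothetical callable that would wrap an LLM call in practice.

```python
from typing import Callable, List


def batched(items: List[str], batch_size: int) -> List[List[str]]:
    """Split a list into consecutive batches of at most batch_size items."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def summarize_all(
    reviews: List[str],
    summarize: Callable[[List[str]], str],
    batch_size: int = 50,
) -> str:
    """Summarize every review by reducing batch summaries to a single one."""
    if batch_size < 2:
        raise ValueError("batch_size must be at least 2 so each pass shrinks the list")
    if not reviews:
        return ""

    # Map: every review is part of exactly one batch.
    summaries = [summarize(batch) for batch in batched(list(reviews), batch_size)]

    # Reduce: keep summarizing the summaries until one remains.
    while len(summaries) > 1:
        summaries = [summarize(batch) for batch in batched(summaries, batch_size)]
    return summaries[0]
```

Each batch stays small enough for a model to read closely, so no review is skipped the way it might be in a single oversized prompt.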

Let’s ask the agents to assign a topic to each review, so that we can better see what it is about, and let’s also ask which specific attractions were mentioned. For this task, we’ll ask for 1,000 random reviews from California Disneyland and proceed with those.

Topics

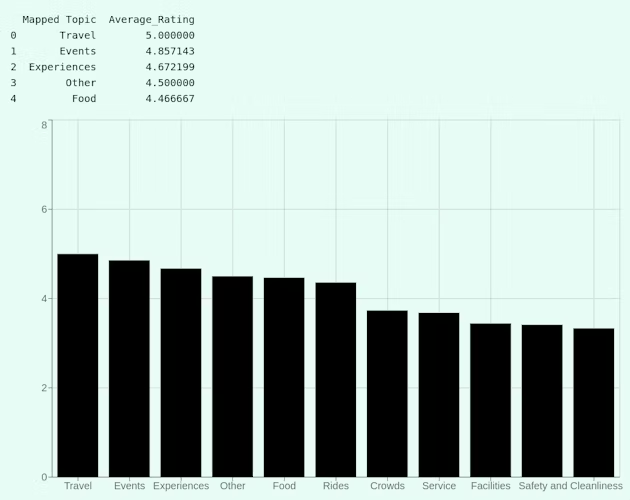

Notice that extracting the topics is not a trivial step. It requires topic modeling over free-form text that takes the whole dataset into account, and our agents can handle it. Let’s see the review ratings for each review topic that we found:

Interesting! So reviews that were about traveling (only 9 entries, explaining the perfect 5.0) and holding or attending events score rather high, while those that mention service and cleanliness consistently score lower. Not a huge surprise, and further analysis is warranted. The food appears to be tasty!

Experiences and Other are somewhat catch-all categories. Let’s ask the agents to summarize the contents of the Experiences topic for each rating from 1 to 5. Again, passing the large dataset to an LLM as text would not give good results, whereas the agentic approach goes through each review to synthesize an answer. Below is the LLM analysis:

For the 1-star ratings, reviewers were dissatisfied with the long wait times, crowded conditions, and the lack of Disney vibe at Disneyland. They mentioned that many attractions were closed, and there were no characters out for the kids to take pictures with. Specific attractions like California Screamin' were criticized for poor planning and low visibility. Reviewers also expressed frustration with the dining package not providing any advantage for getting a good view of the shows. Suggestions for improvement included better organization for show seating and reserved areas for dining options. Overall, the 1-star reviews highlighted disappointment with the overall experience and a preference to spend money and time on better experiences.

For the 2-star ratings, visitors commonly dislike long wait times, crowded areas, unavailable attractions, poor quality food, expensive merchandise, and unhelpful staff. Specific attractions like Haunted Mansion, Pirates of the Caribbean, and Splash Mountain are mentioned negatively. Visitors also express disappointment in the lack of magic and organization compared to other Disney parks, particularly Disney World in Florida. Suggestions for improvement include better management of crowds, more diverse and accessible food options, and overall park renovations to enhance the magical experience. On a positive note, some visitors commend the excellent character staff and the day and night parades. However, overall, visitors recommend against the experience unless significant improvements are made.

For the 3 star ratings, reviewers liked the attractions such as the parade, fireworks, and some of the rides. They appreciated the convenience of using the mobile ticket barcode and the availability of water fountains. Some also mentioned the magic and fun experienced by the kids. However, common dislikes included the long queues for both rides and dining, overpriced food and drinks, the crowded and chaotic environment, and the lack of proper maintenance in some areas. Some reviewers mentioned dissatisfaction with the staff's attitude or service. Visitors also express dissatisfaction with the high prices, long waiting times for rides, and the limited choice of places to eat. Suggestions for improvement include better staff attitude, more available rides, and a review of pricing and ticket pass policies to enhance the overall experience.

For the batch of reviews with a rating of 4, visitors express satisfaction with attractions at Disneyland California such as Space Mountain, Haunted Mansion, Pirates of the Caribbean, and Captain Eo. They appreciate the cleanliness of the park, helpful staff, and the experience for children. Common points of dissatisfaction include long waiting lines, expensive food, and souvenirs, as well as the high cost of admission. Visitors suggest improvements in managing waiting times, lowering prices, and extending park hours. Some visitors also express appreciation for the magical experience, memorable attractions, and the attention to detail in the park. However, they also mention high prices for food and souvenirs, and long lineups for popular attractions. Suggestions for improvement include reducing ticket pricing and providing outdoor heaters during cold weather. Specific attractions mentioned include Disneyland, the Indiana Jones ride, and Disney World.

Visitors who give 5-star ratings consistently praise the magical and memorable experience of Disneyland, mentioning specific attractions like Space Mountain, Haunted Mansion, Fantasmic, California Adventure, night shows/fireworks, Pirates of the Caribbean, and Star Tours as particularly enjoyable. They also appreciate the cleanliness of the park, the kindness of the staff, and the fun for all ages. However, some mention long lines, crowded parks, high expenses for food, and high cost of admission as downsides. Suggestions for improvement include better crowd management, managing wait times, reducing food prices, and adding healthier food options. Overall, the general sentiment for the 5-star ratings is overwhelmingly positive, with praise for the attractions and the magical experience.

In every rating category, people complain about wait times. It appears that those who arrive with a plan to visit specific attractions are generally satisfied with their experience.

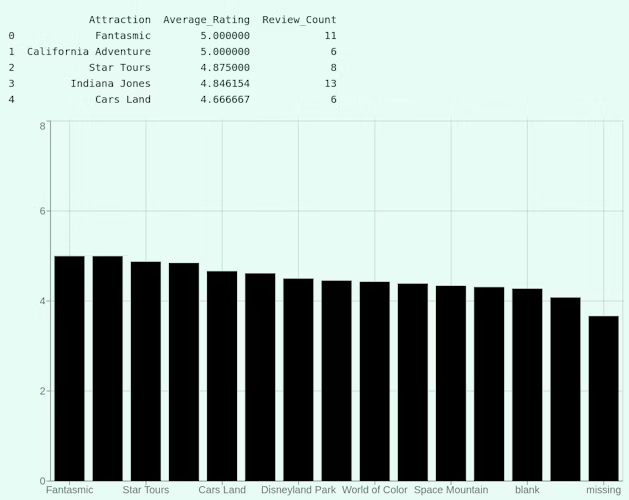

Attractions

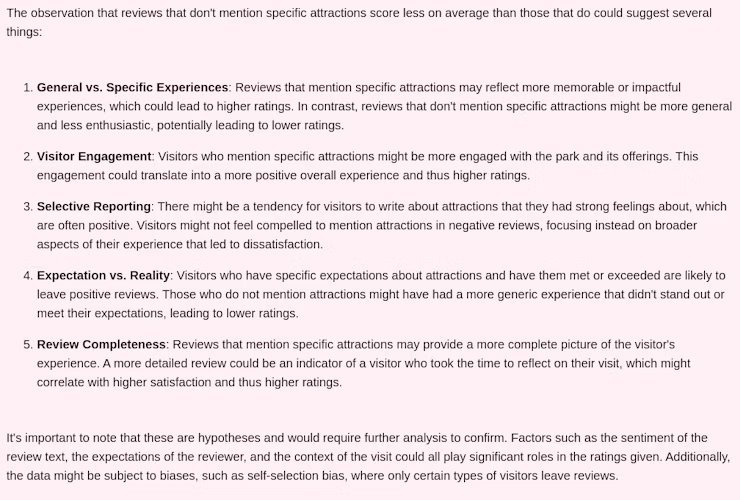

If we look at ratings where a specific attraction or adventure was mentioned, we can see that the highest scores came from reviewers who took the time to mention a specific attraction, and the lowest from those who didn’t mention any. This is consistent with our findings above for the “Experiences” review category.

We are offered the following interpretation about engagement and managing expectations, which could help in designing advertising campaigns and planning events:

Seems that visitors are generally happy when they have a specific attraction they want to visit, leading to a more fulfilling experience! Perhaps a fact-based advertising campaign about the attractions and best times to visit could be very successful.
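The comparison behind this finding can be approximated with a short Python sketch that splits reviews by whether they mention a known attraction and averages the ratings in each group. Simple substring matching is swapped in here for the agents’ attraction extraction, which this post does not detail. The `ATTRACTIONS` list is an illustrative subset of names from the summaries above, and the `text` and `rating` field names are assumptions.

```python
from statistics import mean

# Illustrative subset only; a real list would cover many more attractions.
ATTRACTIONS = ["space mountain", "haunted mansion", "pirates of the caribbean"]


def mentions_attraction(text, attractions=ATTRACTIONS):
    """Case-insensitive check for any known attraction name in the text."""
    lowered = text.lower()
    return any(name in lowered for name in attractions)


def rating_by_mention(reviews):
    """Mean rating for reviews that mention an attraction versus those that don't."""
    groups = {True: [], False: []}
    for review in reviews:
        groups[mentions_attraction(review["text"])].append(review["rating"])
    return {
        "mentions_attraction": mean(groups[True]) if groups[True] else None,
        "no_mention": mean(groups[False]) if groups[False] else None,
    }
```

Substring matching misses misspellings and nicknames, so treat this as a baseline for checking a result, not as a replacement for model-based extraction.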

Conclusion

A single LLM prompt can appear confident even when it misses most of the data. Agentic workflows prevent that by systematically processing every row. Each step is traceable, so results aren’t just fluent; they’re verifiable.

In this dataset, when agents grouped reviews by topic, one clear theme emerged: planning improves satisfaction. Visitors who mentioned specific attractions scored their experience higher than those who didn’t. The same logic applies to data-driven decision-making: context-rich queries lead to better answers.

For SaaS teams, this approach translates to faster time-to-insight, less manual cleaning, and fewer hallucinated summaries. You can build analytics pipelines that scale beyond siloed data, while keeping a natural language interface for querying. It’s an AI system that grows with you.

Tabular data agents aren’t theoretical. Our clients have had great success with agents, and they’re already reshaping analytics inside fast-scaling companies. As LLMs evolve, these agents will form the building blocks for autonomous reporting, dynamic dashboards, and automated decision support. Start small, define the right use cases, and scale the capabilities as your data grows.
